Download AWS Certified Machine Learning Engineer - Associate.MLA-C01.ExamTopics.2026-02-09.78q.vcex

| Field | Value |
| --- | --- |
| Vendor | Amazon |
| Exam Code | MLA-C01 |
| Exam Name | AWS Certified Machine Learning Engineer - Associate |
| Date | Feb 09, 2026 |
| File Size | 2 MB |

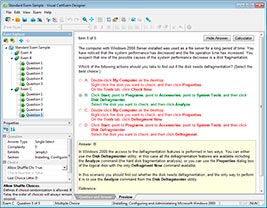

How to open VCEX files?

Files with the VCEX extension can be opened with ProfExam Simulator.

Demo Questions

Question 1

An ML engineer needs to implement a solution to host a trained ML model. The rate of requests to the model will be inconsistent throughout the day.

The ML engineer needs a scalable solution that minimizes costs when the model is not in use. The solution also must maintain the model's capacity to respond to requests during times of peak usage.

Which solution will meet these requirements?

- A. Create AWS Lambda functions that have fixed concurrency to host the model. Configure the Lambda functions to automatically scale based on the number of requests to the model.

- B. Deploy the model on an Amazon Elastic Container Service (Amazon ECS) cluster that uses AWS Fargate. Set a static number of tasks to handle requests during times of peak usage.

- C. Deploy the model to an Amazon SageMaker endpoint. Deploy multiple copies of the model to the endpoint. Create an Application Load Balancer to route traffic between the different copies of the model at the endpoint.

- D. Deploy the model to an Amazon SageMaker endpoint. Create SageMaker endpoint auto scaling policies that are based on Amazon CloudWatch metrics to adjust the number of instances dynamically.

Correct answer: D
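
The auto scaling approach in the correct answer can be sketched as two Application Auto Scaling requests: one registers the endpoint variant as a scalable target, the other attaches a target-tracking policy on a CloudWatch metric. This is a minimal sketch rather than a definitive setup. The endpoint name `churn-endpoint`, the variant name `AllTraffic`, the policy name, the capacity bounds, the cooldowns, and the target value are all assumptions chosen for illustration.

```python
def build_autoscaling_requests(endpoint_name, variant_name,
                               min_instances=1, max_instances=4,
                               invocations_per_instance=100.0):
    """Build keyword arguments for the Application Auto Scaling calls
    register_scalable_target and put_scaling_policy."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    # Bounds on how many instances the variant may run (assumed values).
    target = {**common, "MinCapacity": min_instances, "MaxCapacity": max_instances}
    policy = {
        **common,
        "PolicyName": f"{endpoint_name}-invocations-tracking",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep average invocations per instance near this value (assumed).
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,   # seconds, assumed
            "ScaleOutCooldown": 60,   # seconds, assumed
        },
    }
    return target, policy


target, policy = build_autoscaling_requests("churn-endpoint", "AllTraffic")
print(target["ResourceId"])
```

With boto3 installed and credentials configured, the two dictionaries could be passed to `boto3.client("application-autoscaling")` as `register_scalable_target(**target)` and `put_scaling_policy(**policy)`.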

Question 2

An ML engineer is using a training job to fine-tune a deep learning model in Amazon SageMaker Studio. The ML engineer previously used the same pre-trained model with a similar dataset. The ML engineer expects vanishing gradient, underutilized GPU, and overfitting problems.

The ML engineer needs to implement a solution to detect these issues and to react in predefined ways when the issues occur. The solution also must provide comprehensive real-time metrics during the training.

Which solution will meet these requirements with the LEAST operational overhead?

- A. Use TensorBoard to monitor the training job. Publish the findings to an Amazon Simple Notification Service (Amazon SNS) topic. Create an AWS Lambda function to consume the findings and to initiate the predefined actions.

- B. Use Amazon CloudWatch default metrics to gain insights about the training job. Use the metrics to invoke an AWS Lambda function to initiate the predefined actions.

- C. Expand the metrics in Amazon CloudWatch to include the gradients in each training step. Use the metrics to invoke an AWS Lambda function to initiate the predefined actions.

- D. Use SageMaker Debugger built-in rules to monitor the training job. Configure the rules to initiate the predefined actions.

Correct answer: D

Question 3

A company is planning to create several ML prediction models. The training data is stored in Amazon S3. The entire dataset is more than 5 TB in size and consists of CSV, JSON, Apache Parquet, and simple text files.

The data must be processed in several consecutive steps. The steps include complex manipulations that can take hours to finish running. Some of the processing involves natural language processing (NLP) transformations. The entire process must be automated.

Which solution will meet these requirements?

- A. Process data at each step by using Amazon SageMaker Data Wrangler. Automate the process by using Data Wrangler jobs.

- B. Use Amazon SageMaker notebooks for each data processing step. Automate the process by using Amazon EventBridge.

- C. Process data at each step by using AWS Lambda functions. Automate the process by using AWS Step Functions and Amazon EventBridge.

- D. Use Amazon SageMaker Pipelines to create a pipeline of data processing steps. Automate the pipeline by using Amazon EventBridge.

Correct answer: D

Question 4

An ML engineer needs to use AWS CloudFormation to create an ML model that an Amazon SageMaker endpoint will host.

Which resource should the ML engineer declare in the CloudFormation template to meet this requirement?

- A. AWS::SageMaker::Model

- B. AWS::SageMaker::Endpoint

- C. AWS::SageMaker::NotebookInstance

- D. AWS::SageMaker::Pipeline

Correct answer: A
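
To make the resource declaration concrete, the following sketch builds a small CloudFormation template as a Python dictionary and serializes it to JSON. It is illustrative only: the logical ID `ExampleModel`, the account ID, the role ARN, the container image URI, and the S3 path are placeholder assumptions. Hosting the model would also need endpoint configuration and endpoint resources, which are left out here.

```python
import json

# All ARNs, URIs, and names below are placeholders, not real resources.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "ExampleModel": {
            "Type": "AWS::SageMaker::Model",
            "Properties": {
                # IAM role that SageMaker assumes to pull the image and artifacts.
                "ExecutionRoleArn": "arn:aws:iam::123456789012:role/ExampleSageMakerRole",
                "PrimaryContainer": {
                    # Inference container image (placeholder URI).
                    "Image": "123456789012.dkr.ecr.us-east-1.amazonaws.com/example-inference:latest",
                    # Trained model artifacts (placeholder S3 path).
                    "ModelDataUrl": "s3://example-bucket/model/model.tar.gz",
                },
            },
        }
    },
}

template_json = json.dumps(template, indent=2)
print(template_json)
```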

Question 5

A company has a large collection of chat recordings from customer interactions after a product release. An ML engineer needs to create an ML model to analyze the chat data. The ML engineer needs to determine the success of the product by reviewing customer sentiments about the product.

Which action should the ML engineer take to complete the evaluation in the LEAST amount of time?

- A. Use Amazon Rekognition to analyze sentiments of the chat conversations.

- B. Train a Naive Bayes classifier to analyze sentiments of the chat conversations.

- C. Use Amazon Comprehend to analyze sentiments of the chat conversations.

- D. Use random forests to classify sentiments of the chat conversations.

Correct answer: C
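
As a rough illustration of why a managed service is fastest here, the sketch below tallies sentiment labels by calling `detect_sentiment` once per message, with no model training step. The helper `tally_sentiments`, the `FakeComprehend` stand-in, and the sample messages are hypothetical. The stand-in exists only so the sketch runs offline, and its keyword rule is not how Amazon Comprehend works.

```python
from collections import Counter


def tally_sentiments(comprehend_client, messages, language_code="en"):
    """Count the sentiment label returned for each chat message."""
    counts = Counter()
    for text in messages:
        response = comprehend_client.detect_sentiment(
            Text=text, LanguageCode=language_code
        )
        counts[response["Sentiment"]] += 1
    return counts


class FakeComprehend:
    """Offline stand-in for boto3.client("comprehend") (assumption for demo)."""

    def detect_sentiment(self, Text, LanguageCode):
        # Toy keyword rule so the example produces output without AWS access.
        label = "POSITIVE" if "love" in Text.lower() else "NEGATIVE"
        return {"Sentiment": label}


counts = tally_sentiments(
    FakeComprehend(),
    ["I love the new release", "It keeps crashing"],
)
print(dict(counts))
```

With boto3 and credentials available, `boto3.client("comprehend")` could be passed in place of `FakeComprehend()`.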

Question 6

A company wants to predict the success of advertising campaigns by considering the color scheme of each advertisement. An ML engineer is preparing data for a neural network model. The dataset includes color information as categorical data.

Which technique for feature engineering should the ML engineer use for the model?

- A. Apply label encoding to the color categories. Automatically assign each color a unique integer.

- B. Implement padding to ensure that all color feature vectors have the same length.

- C. Perform dimensionality reduction on the color categories.

- D. One-hot encode the color categories to transform the color scheme feature into a binary matrix.

Correct answer: D
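
One-hot encoding can be sketched in a few lines of plain Python. Each color becomes its own binary column, so the network does not read an artificial ordering into the categories the way it could with integer labels. The color values and the helper name `one_hot_encode` are made up for illustration.

```python
def one_hot_encode(values):
    """Return the sorted category list and one binary row per input value."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1  # exactly one column is set per row
        rows.append(row)
    return categories, rows


categories, matrix = one_hot_encode(["red", "blue", "green", "blue"])
print(categories)
for row in matrix:
    print(row)
```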

Question 7

A company uses Amazon Athena to query a dataset in Amazon S3. The dataset has a target variable that the company wants to predict.

The company needs to use the dataset in a solution to determine if a model can predict the target variable.

Which solution will provide this information with the LEAST development effort?

- A. Create a new model by using Amazon SageMaker Autopilot. Report the model's achieved performance.

- B. Implement custom scripts to perform data pre-processing, multiple linear regression, and performance evaluation. Run the scripts on Amazon EC2 instances.

- C. Configure Amazon Macie to analyze the dataset and to create a model. Report the model's achieved performance.

- D. Select a model from Amazon Bedrock. Tune the model with the data. Report the model's achieved performance.

Correct answer: A

Question 8

A company has a large, unstructured dataset. The dataset includes many duplicate records across several key attributes.

Which solution on AWS will detect duplicates in the dataset with the LEAST code development?

- A. Use Amazon Mechanical Turk jobs to detect duplicates.

- B. Use Amazon QuickSight ML Insights to build a custom deduplication model.

- C. Use Amazon SageMaker Data Wrangler to pre-process and detect duplicates.

- D. Use the AWS Glue FindMatches transform to detect duplicates.

Correct answer: D

Question 9

An ML engineer has trained a neural network by using stochastic gradient descent (SGD). The neural network performs poorly on the test set. The values for training loss and validation loss remain high and show an oscillating pattern. The values decrease for a few epochs and then increase for a few epochs before repeating the same cycle.

What should the ML engineer do to improve the training process?

- A. Introduce early stopping.

- B. Increase the size of the test set.

- C. Increase the learning rate.

- D. Decrease the learning rate.

Correct answer: D
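
The effect of the learning rate can be seen on a toy problem. The sketch below runs plain (not stochastic) gradient descent on the one-dimensional loss f(w) = w², which is an assumption made to keep the example small. With a step size that is too large, the weight jumps back and forth across the minimum and the loss never falls. With a smaller step size, the loss shrinks steadily.

```python
def gradient_descent(learning_rate, steps=20, w=1.0):
    """Minimize f(w) = w**2 and return the loss after each update."""
    losses = []
    for _ in range(steps):
        grad = 2 * w                  # derivative of w**2
        w = w - learning_rate * grad  # gradient descent update
        losses.append(w * w)
    return losses


high_lr_losses = gradient_descent(learning_rate=1.0)  # overshoots every step
low_lr_losses = gradient_descent(learning_rate=0.1)   # converges toward 0

print("high learning rate, final loss:", high_lr_losses[-1])
print("low learning rate, final loss:", low_lr_losses[-1])
```

Real training curves are noisier than this, but the same overshooting mechanism is a common cause of loss values that fall and rise in cycles.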

Question 10

A company has deployed an XGBoost prediction model in production to predict if a customer is likely to cancel a subscription. The company uses Amazon SageMaker Model Monitor to detect deviations in the F1 score.

During a baseline analysis of model quality, the company recorded a threshold for the F1 score. After several months of no change, the model's F1 score decreases significantly.

What could be the reason for the reduced F1 score?

- A. Concept drift occurred in the underlying customer data that was used for predictions.

- B. The model was not sufficiently complex to capture all the patterns in the original baseline data.

- C. The original baseline data had a data quality issue of missing values.

- D. Incorrect ground truth labels were provided to Model Monitor during the calculation of the baseline.

Correct answer: A
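
To illustrate how drift shows up in a model quality metric, the sketch below computes the F1 score for the same fixed predictions against two sets of ground truth labels. All labels, predictions, and the 0.8 threshold are invented for illustration, and this is not how SageMaker Model Monitor is implemented. It only shows that F1 falls when the relationship between inputs and outcomes changes while the model stays the same.

```python
def f1_score(y_true, y_pred):
    """Compute F1 for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


predictions = [1, 1, 1, 0, 0, 1, 1, 0]      # model behavior is unchanged
baseline_labels = [1, 1, 1, 0, 0, 0, 1, 0]  # outcomes at baseline time (made up)
later_labels = [0, 1, 0, 1, 1, 0, 1, 0]     # outcomes months later (made up)

threshold = 0.8  # assumed alerting threshold
baseline_f1 = f1_score(baseline_labels, predictions)
later_f1 = f1_score(later_labels, predictions)

print(round(baseline_f1, 3), round(later_f1, 3), later_f1 < threshold)
```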

HOW TO OPEN VCE FILES

Use VCE Exam Simulator to open VCE files

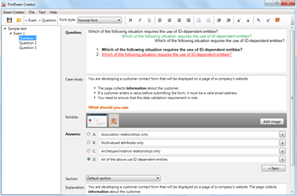

HOW TO OPEN VCEX AND EXAM FILES

Use ProfExam Simulator to open VCEX and EXAM files

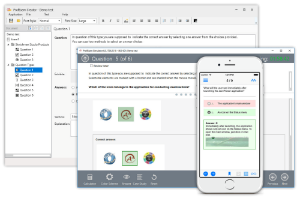

ProfExam at a 20% markdown

You have the opportunity to purchase ProfExam at a 20% reduced price

Get Now!